Deploying Large Language Models on Android

Train and Deploy Your Own LLMs Using KerasNLP and TensorFlow Lite

In this blog, I am going to talk about what Large Language Models are and how they can be used to generate text, translate languages, and answer questions. I will then dive into how to use KerasNLP to train Large Language Models and how to use TensorFlow Lite to optimise the trained model so that it can be deployed on Android.

What are Large Language Models?

Large Language Models are machine learning models trained on large text datasets. These models are usually used for natural language processing tasks such as text generation, question answering, and language translation.

Large Language Models use the Transformer architecture, which was introduced by Google Research in 2017 in the paper "Attention Is All You Need". The Transformer architecture is built around a concept known as the 'attention mechanism'.

The attention mechanism is a way for language models to pay close attention to different parts of a sentence or document while processing it. It works similarly to how we humans pay attention to specific words or phrases when understanding a sentence. This mechanism helps language models handle long-range dependencies, where words far apart in a sentence need to influence each other's meaning. By attending to the relevant parts of the input, the model can better understand the context and generate coherent, meaningful responses.

Let's take an example of predicting the next word in a sentence.

Sentence: "After working tirelessly for hours, he finally solved the ___________."

In this example, the attention mechanism can give more weight to the phrase "working tirelessly" and the word "solved" while predicting the next word. The context indicates that the person worked hard and eventually reached a solution. By emphasizing these relevant words and phrases, the attention mechanism lets the model predict a word that fits the context. For instance, the model might predict "problem" or "puzzle" as the next word, reflecting the person's effort and eventual success in resolving something.
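To make this a little more concrete, here is a minimal sketch of scaled dot-product attention, the form described in the Transformer paper, written in plain Python. The two-dimensional vectors are made-up numbers chosen for illustration, not real model weights, and the query simply stands in for the blank position in the sentence.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(query, keys, values):
    """Scaled dot-product attention for a single query vector."""
    d = len(query)
    # Similarity between the query and every key, scaled by sqrt(d).
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    weights = softmax(scores)
    # The output is the weighted average of the value vectors.
    dim = len(values[0])
    output = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return weights, output

# Hypothetical vectors for three context words (illustrative numbers only).
words = ["working", "hours", "solved"]
keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
values = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
query = [1.0, 1.0]  # stands in for the blank position

weights, output = attention(query, keys, values)
for word, w in zip(words, weights):
    print(f"{word}: {w:.3f}")
```

With these toy numbers, the key for "solved" lines up best with the query, so it receives the largest weight. A real model learns the query, key, and value vectors during training and runs many such attention heads in parallel.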

This is a very high-level overview of Large Language Models and how they use attention mechanisms in natural language processing tasks. Let's move on to KerasNLP and find a model to train.

KerasNLP

Large language models are complex to build and expensive to train from scratch. KerasNLP is a natural language processing library that offers state-of-the-art pre-trained models for free.

Let's load a pre-trained GPT-2 model and generate some text.

import time

import keras_nlp

# Load the tokenizer, preprocessor, and causal LM from the same preset.
gpt2_tokenizer = keras_nlp.models.GPT2Tokenizer.from_preset("gpt2_base_en")
gpt2_preprocessor = keras_nlp.models.GPT2CausalLMPreprocessor.from_preset(
    "gpt2_base_en",
    sequence_length=256,
    add_end_token=True,
)
gpt2_lm = keras_nlp.models.GPT2CausalLM.from_preset(
    "gpt2_base_en", preprocessor=gpt2_preprocessor
)

# Time a single generation call.
start = time.time()
output = gpt2_lm.generate("My trip to Yosemite was", max_length=200)
print("\nGPT-2 output:")
# Assumes generate() returns a tf.Tensor; if your KerasNLP version
# returns a plain str, use print(output) instead.
print(output.numpy().decode("utf-8"))
end = time.time()
print("TOTAL TIME ELAPSED: ", end - start)

The GPT-2 model is broken down hierarchically into several modules in KerasNLP, all of which have a from_preset() function that loads a pre-trained model. Let's go through them one by one.

GPT2Tokenizer

The tokenizer converts your text input into token IDs. There are different kinds of tokenizers; the GPT-2 model uses Byte-Pair Encoding (BPE).
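As a rough illustration of the idea behind BPE, the sketch below repeatedly merges the most frequent pair of adjacent symbols and then maps the resulting tokens to integer IDs. This is a simplified character-level toy, not the GPT2Tokenizer implementation: the real tokenizer works on bytes and uses a vocabulary and merge list learned from a large corpus. The sample text and the number of merges are assumptions made for the example.

```python
from collections import Counter

def most_frequent_pair(tokens):
    """Return the adjacent pair of symbols that occurs most often."""
    pairs = Counter(zip(tokens, tokens[1:]))
    return max(pairs, key=pairs.get)

def merge_pair(tokens, pair):
    """Replace every occurrence of `pair` with a single merged symbol."""
    merged, i = [], 0
    while i < len(tokens):
        if i + 1 < len(tokens) and (tokens[i], tokens[i + 1]) == pair:
            merged.append(tokens[i] + tokens[i + 1])
            i += 2
        else:
            merged.append(tokens[i])
            i += 1
    return merged

tokens = list("low lower lowest")  # start from single characters
for _ in range(2):                 # learn two merges
    tokens = merge_pair(tokens, most_frequent_pair(tokens))

# Build a toy vocabulary and convert the tokens to integer IDs.
vocab = {tok: idx for idx, tok in enumerate(sorted(set(tokens)))}
token_ids = [vocab[tok] for tok in tokens]

print(tokens)
print(token_ids)
```

After two merges the frequent fragment "low" has become a single token, while rarer endings such as "er" and "est" are still split into characters. This is how BPE keeps common words compact while still being able to represent unseen ones.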

GPT2CausalLMPreprocessor

The preprocessor does additional processing, e.g., padding the tensor of token IDs to a specified length, after tokenization is finished.
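The padding step can be sketched in a few lines of plain Python. The helper below is hypothetical and only mimics the general behaviour described above: it optionally appends an end token, truncates or pads to a fixed length, and returns a mask marking which positions hold real tokens. The pad and end token IDs are placeholder values, not the ones GPT-2 uses.

```python
def pad_token_ids(token_ids, sequence_length, pad_id=0, end_id=None):
    """Append an optional end token, then truncate or pad to a fixed length."""
    ids = list(token_ids)
    if end_id is not None:
        ids.append(end_id)
    ids = ids[:sequence_length]  # drop anything beyond the target length
    num_padding = sequence_length - len(ids)
    # 1 marks a real token, 0 marks padding the model should ignore.
    mask = [1] * len(ids) + [0] * num_padding
    return ids + [pad_id] * num_padding, mask

# Placeholder IDs: 99 plays the role of the end token, 0 is the pad token.
padded_ids, padding_mask = pad_token_ids([11, 22, 33], 6, pad_id=0, end_id=99)
print(padded_ids)    # [11, 22, 33, 99, 0, 0]
print(padding_mask)  # [1, 1, 1, 1, 0, 0]
```

Fixed-length inputs matter here because the exported model is traced with static shapes, which is why the preprocessor above is created with sequence_length=256.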

GPT2Backbone

This is where the neural network is built. GPT-2 implements a Transformer-based decoder network.

GPT2CausalLM

This wraps GPT2Backbone and multiplies the output of the GPT-2 backbone by the embedding matrix to generate logits over the vocabulary tokens.
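That multiplication can be illustrated with a tiny example. In the sketch below, each row of the embedding matrix belongs to one vocabulary token, and the logit for a token is the dot product of the backbone's output vector with that row. The four-word vocabulary and all numbers are invented for illustration; the real model has tens of thousands of tokens and much larger vectors.

```python
def logits_from_hidden(hidden, embedding):
    """Score each vocabulary entry by its dot product with the hidden state."""
    return [sum(h * e for h, e in zip(hidden, row)) for row in embedding]

# Hypothetical 4-token vocabulary with 3-dimensional embeddings.
vocab = ["problem", "puzzle", "banana", "the"]
embedding = [
    [0.9, 0.1, 0.0],
    [0.8, 0.2, 0.1],
    [0.0, 0.1, 0.9],
    [0.1, 0.9, 0.1],
]
hidden = [1.0, 0.0, 0.2]  # made-up backbone output for the last position

logits = logits_from_hidden(hidden, embedding)
best = vocab[logits.index(max(logits))]
print(best)  # problem
```

Picking the highest logit is greedy decoding; other sampling strategies choose among the top-scoring tokens instead.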

TensorFlow Lite

The model we are using to generate text runs on a powerful GPU in the cloud. How can we run it on a mobile device?

An obvious solution would be to create an API and call the endpoint from your mobile application. But how do you run the model purely on the device? This is where TensorFlow Lite comes into the picture. TensorFlow Lite is a library for deploying models on mobile devices, microcontrollers, and other edge devices.

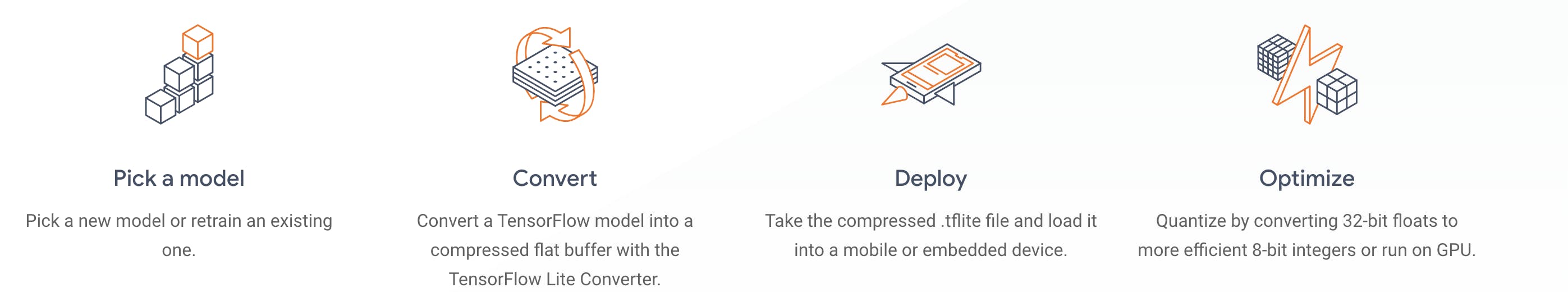

The diagram above shows how TensorFlow Lite works. Let us now fit this into our example.

- Pick our pre-trained model from KerasNLP

The generate() function of GPT2CausalLM is what runs the GPT-2 model and generates the text for us, so we will wrap this function in a TensorFlow concrete function. We also define a run_inference() helper that we will use later to test the converted model.

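import numpy as np
import tensorflow as tf
import tensorflow_text as tf_text
from tensorflow.lite.python import interpreter

# Wrap generate() in a tf.function so it can be traced into a graph.
@tf.function
def generate(prompt, max_length):
    return gpt2_lm.generate(prompt, max_length)

# Trace with a scalar string prompt and a fixed max_length of 100.
concrete_func = generate.get_concrete_function(tf.TensorSpec([], tf.string), 100)

def run_inference(input, generate_tflite):
    # Load the converted model with the TensorFlow Text custom ops registered.
    interp = interpreter.InterpreterWithCustomOps(
        model_content=generate_tflite,
        custom_op_registerers=tf_text.tflite_registrar.SELECT_TFTEXT_OPS)
    interp.get_signature_list()
    # Run the default serving signature on the prompt.
    generator = interp.get_signature_runner('serving_default')
    output = generator(prompt=np.array([input]))
    print("\nGenerated with TFLite:\n", output["output_0"])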

- Convert it into a flat buffer with the TensorFlow Lite Converter.

# Turn off XLA JIT compilation before conversion.
gpt2_lm.jit_compile = False
converter = tf.lite.TFLiteConverter.from_concrete_functions(
    [concrete_func], gpt2_lm)
converter.target_spec.supported_ops = [
    tf.lite.OpsSet.TFLITE_BUILTINS,  # enable TensorFlow Lite ops.
    tf.lite.OpsSet.SELECT_TF_OPS,  # enable TensorFlow ops.
]
converter.allow_custom_ops = True
converter.target_spec.experimental_select_user_tf_ops = ["UnsortedSegmentJoin", "UpperBound"]
converter._experimental_guarantee_all_funcs_one_use = True
generate_tflite = converter.convert()

# Check that the converted model still generates text.
run_inference("I'm enjoying a", generate_tflite)

# Save the converted model to disk.
with open('unquantized_gpt2.tflite', 'wb') as f:
    f.write(generate_tflite)

- Take the compressed .tflite file and load it into our mobile application.

We can optimize the model further with quantization, which converts 32-bit floats to more efficient 8-bit integers.

gpt2_lm.jit_compile = False
converter = tf.lite.TFLiteConverter.from_concrete_functions(
    [concrete_func], gpt2_lm)
converter.target_spec.supported_ops = [
    tf.lite.OpsSet.TFLITE_BUILTINS,  # enable TensorFlow Lite ops.
    tf.lite.OpsSet.SELECT_TF_OPS,  # enable TensorFlow ops.
]
converter.allow_custom_ops = True
# The only change from the previous conversion: enable default optimizations.
converter.optimizations = [tf.lite.Optimize.DEFAULT]
converter.target_spec.experimental_select_user_tf_ops = ["UnsortedSegmentJoin", "UpperBound"]
converter._experimental_guarantee_all_funcs_one_use = True
quant_generate_tflite = converter.convert()

run_inference("I'm enjoying a", quant_generate_tflite)

with open('quantized_gpt2.tflite', 'wb') as f:
    f.write(quant_generate_tflite)
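To build some intuition for what converting 32-bit floats to 8-bit integers means, here is a simplified sketch of symmetric 8-bit quantization in plain Python. It is not what the TensorFlow Lite converter does internally, which is more involved; the function names and sample weights are invented for this example.

```python
def quantize_int8(values):
    """Map floats to integers in [-127, 127] using one shared scale."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale

def dequantize(quantized, scale):
    """Recover approximate float values from the stored integers."""
    return [q * scale for q in quantized]

weights = [-1.0, -0.25, 0.0, 0.5, 1.0]  # made-up float weights
quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)

for original, q, approx in zip(weights, quantized, restored):
    print(f"{original:+.4f} -> {q:4d} -> {approx:+.4f}")
```

Each weight now fits in one byte instead of four, at the cost of a small rounding error bounded by the scale. That trade-off is why the quantized .tflite file is noticeably smaller than the unquantized one.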

Finally, we have a .tflite file that we can bundle into our Android application. Once the app calls the generate function, our LLM is up and running on the Android device.

You can try the steps in the colab here.

So go ahead, unravel the possibilities and redefine what's achievable with a Large Language Model on your Android device. Happy deploying!